Group member: Elif Ergin

See my previous blog post about this project here.

Ideation

Ok, so this project took a weird turn, but it could always be weirder because Elif’s and my original-original idea was a Zac Efron Cornell Box. So consider yourselves lucky! We kept thinking about our previously decided theme, “summer dream,” but no concrete ideas came to mind for what we wanted in our Unity project. What started as a joke ultimately became our project; we were inspired by the song “Summer Wine” and now we are giving our feet pics away for free!

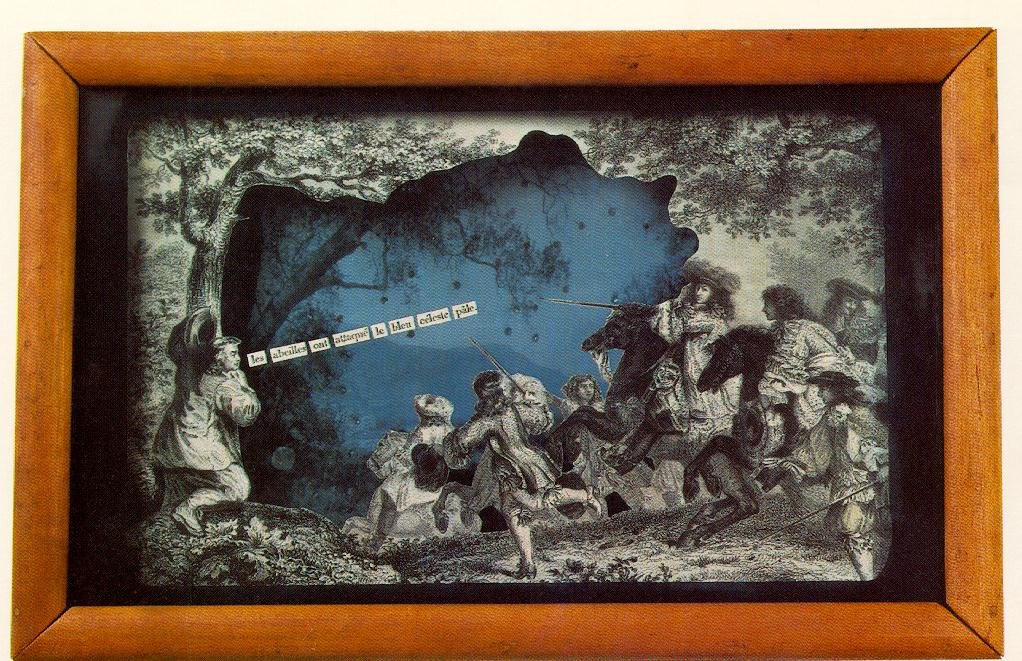

We had done a bunch of research on existing Cornell boxes and it was interesting to see that a lot of different artists made them. We were really inspired by the physical boxes, all their compartments, layered feel, and the use of found objects, so we tried to incorporate some of these aspects in our own piece.

Fabrication

1. Find the perfect box at Michaels.

2. Laser cut embellishments and shelves from 1/8” plywood.

3. Use the orbital sander to remove the burns from the laser cutter.

4. Check that the shelves and the screen (iPad) fit in the box.

5. Remove excess sawdust and prep the area for staining all the wood.

6. Stain the frame embellishments.

7. Remove the box lid and stain the wood.

8. Reassemble box lid. Install shelves using wood glue. Apply mirrored sheet to glass pane.

9. Collect all kinds of diorama materials.

10. Collect all kinds of miniature thingies.

11. Assemble!

Unity

For the digital Cornell box, I was thinking about the summer wine concept and remembered this Coke commercial that I always see when going to the movies. It kind of led the design of our box’s story.

Collect Assets

Neither Elif nor I had much experience working in Unity before this so we started by rounding up assets for our box and hoping it would inspire the final aesthetic. We searched through the Unity Asset Store for free 3D assets that reminded us of summer. It was also important for us to be a part of our Cornell box so we filmed our own eyes and stomping feet (to make the wine). Elif used the magic of Runway to remove the background from our videos.

Create Basic Box

We used the box asset supplied to us in class and made it the same aspect ratio as the iPad. I found some stock sky footage and used that as our box’s wallpaper. Then I went on to make all our different grapes.
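Matching the box to the screen is just a proportion. Here is a minimal sketch of that arithmetic in plain Python (not Unity C#, and not something from the project itself). The resolution in the usage note is a hypothetical example, since the post doesn't say which iPad model was used.

```python
def match_aspect(screen_width_px, screen_height_px, box_height):
    """Return (width, height) for a box that shares the screen's aspect ratio.

    box_height is in whatever units the 3D scene uses; the width is scaled
    so that width / height equals screen_width_px / screen_height_px.
    """
    box_width = box_height * screen_width_px / screen_height_px
    return (box_width, box_height)
```

For example, a hypothetical 2048 x 1536 screen and a box 3 units tall would call for a box 4 units wide.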

I made a standard purple and see-through grape material and parented the spheres to the objects floating inside them so that they would always move together. I also found a disco ball material and Elif helped me wrap our eye videos around other spheres as well.

We also got our feet videos to play on planes in front of our box. I really like the surreal style of the 2D feet existing in a 3D world; I think it’s kind of funny!

Scripting

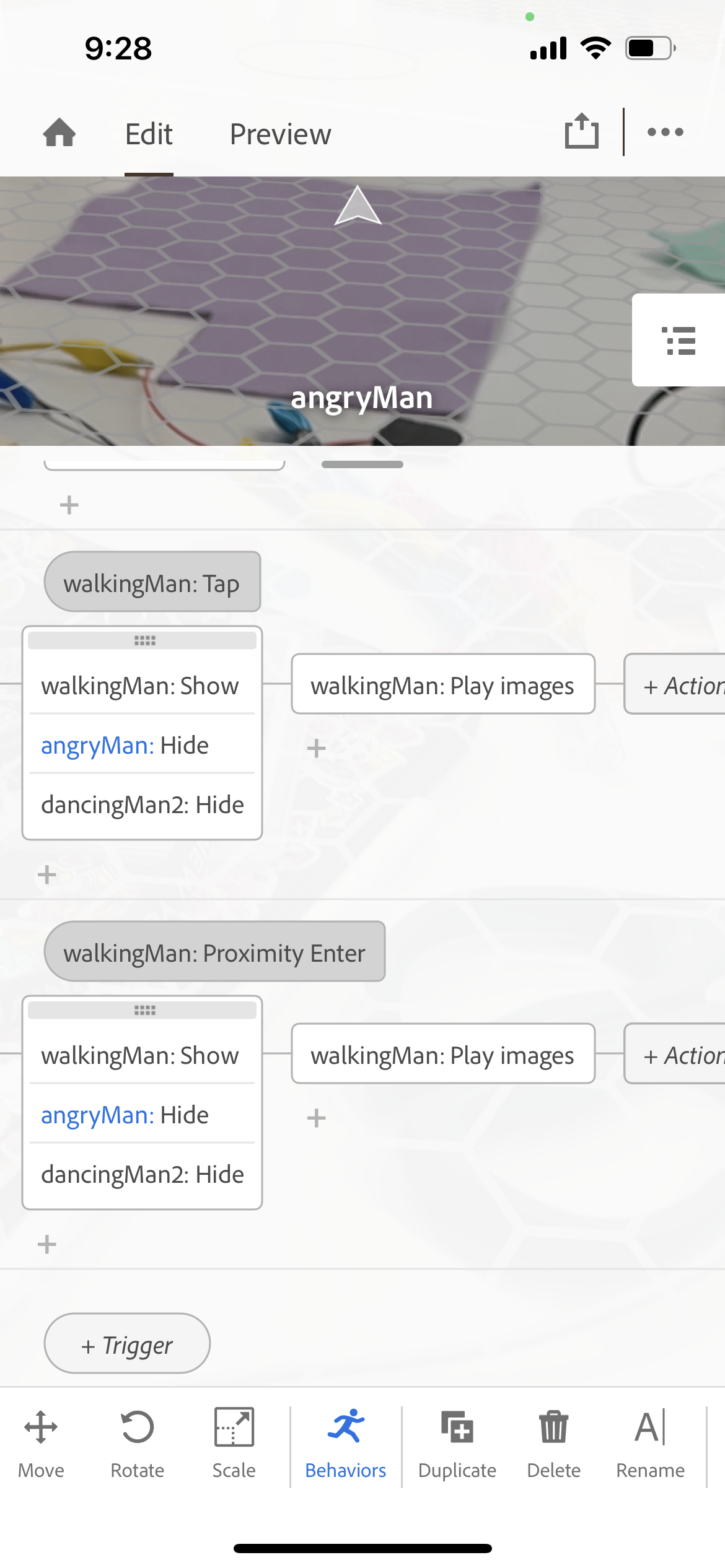

Now, on to making things move! I started with the “InstantiationKeyRandomLocation” script we received in class. I changed it so that a grape would spawn on mouse click instead of pressing the space bar. We were hoping the mouse click would translate to an iPad tap once built for iOS and, spoiler alert, it does!

So after getting one grape to spawn, I wanted to update the script to randomly choose one of the grapes we created. I found a really helpful video on how to create an array of GameObjects. On click, the script chooses a random index in this array and spawns that type of grape.
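The random pick can be sketched outside Unity in a few lines. This is plain Python rather than the C# script from the project, and the prefab names and function names are hypothetical stand-ins. It only shows the idea of choosing a random index into an array on each click.

```python
import random

# Hypothetical stand-ins for the grape prefabs; the real names are not in the post.
GRAPE_PREFABS = ["purple_grape", "clear_grape", "disco_grape", "eye_grape"]

def pick_grape(prefabs, rng=random):
    """Return one prefab chosen uniformly at random by index."""
    index = rng.randrange(len(prefabs))  # random index in [0, len(prefabs))
    return prefabs[index]

def on_click(prefabs, spawned, rng=random):
    """Handle one click or tap: choose a grape type and record the spawn."""
    grape = pick_grape(prefabs, rng)
    spawned.append(grape)
    return grape
```

Each call to `on_click` adds exactly one grape to `spawned`, so the list grows with every tap unless something removes old grapes.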

After this, we ran into the issue that spawning too many grapes at once would overload the computer and crash Unity. I used a destroy timer to delete each grape a given number of seconds after spawning.
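To see why a destroy timer helps, here is a small simulation in plain Python (again a sketch of the logic, not the Unity script). The five-second lifetime and the steady click rate are assumptions for illustration; the post doesn't give the actual values.

```python
from dataclasses import dataclass

LIFETIME_SECONDS = 5.0  # hypothetical value; the post does not state the real timer

@dataclass
class Grape:
    spawned_at: float  # time of spawn, in seconds

def remove_expired(grapes, now, lifetime=LIFETIME_SECONDS):
    """Keep only the grapes that are younger than the lifetime."""
    return [g for g in grapes if now - g.spawned_at < lifetime]

def peak_live_grapes(clicks_per_second, duration_seconds, lifetime=LIFETIME_SECONDS):
    """Spawn grapes at a steady rate and return the largest live count seen."""
    grapes = []
    peak = 0
    step = 1.0 / clicks_per_second
    for i in range(int(duration_seconds * clicks_per_second)):
        now = i * step
        grapes = remove_expired(grapes, now, lifetime)  # the destroy timer
        grapes.append(Grape(spawned_at=now))            # one new grape per click
        peak = max(peak, len(grapes))
    return peak
```

With four clicks per second for a minute, the timer caps the scene at 20 live grapes, while an infinite lifetime (no timer) lets all 240 pile up.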

I also thought hard about the foot-grape interaction. Obviously, the ideal solution would be to make the grapes look stomped on over time and have some liquid wine appear, but this was a stretch for us because of our limited knowledge of working in Unity. I thought the easiest solution would be to make the grapes slowly shrink and disappear into nothing, so I wrote a coroutine that scales the spawned grapes down a little each frame while the main loop keeps running.
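A Python generator behaves a lot like a coroutine: it does a bit of work, pauses, and picks up where it left off on the next frame. The sketch below uses that to illustrate the shrinking idea. It is not the project's C# coroutine, and the linear shrink, the function names, and the frame timing are all assumptions.

```python
def shrink_over_time(start_scale, duration_seconds, frame_seconds):
    """Yield the grape's scale once per frame, shrinking linearly to zero."""
    elapsed = 0.0
    while elapsed < duration_seconds:
        t = elapsed / duration_seconds   # progress from 0.0 toward 1.0
        yield start_scale * (1.0 - t)    # linear interpolation toward 0
        elapsed += frame_seconds
    yield 0.0                            # last frame: fully shrunk

def run_frames(coroutines):
    """Step every active coroutine once per frame until all have finished.

    Returns a list with one entry per frame, holding the scales produced
    during that frame.
    """
    history = []
    active = list(coroutines)
    while active:
        frame, still_active = [], []
        for co in active:
            try:
                frame.append(next(co))   # resume the coroutine for one step
                still_active.append(co)
            except StopIteration:
                pass                     # this grape is done shrinking
        if frame:
            history.append(frame)
        active = still_active
    return history
```

Because each grape advances only one step per frame, several grapes can shrink at the same time without any one of them blocking the loop.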

Here are the two functions I wrote to get the interactions working for our box:

Finishing touches

We were able to get some office hours with Gabe and he suggested we add some camera effects in post. We learned in class that we had to download the post processing package from the Unity package manager. We added the chromatic aberration effect, which added a blurry dreaminess around the edge of the camera, and the ambient occlusion effect, which darkened the shadows of the box and added some depth.

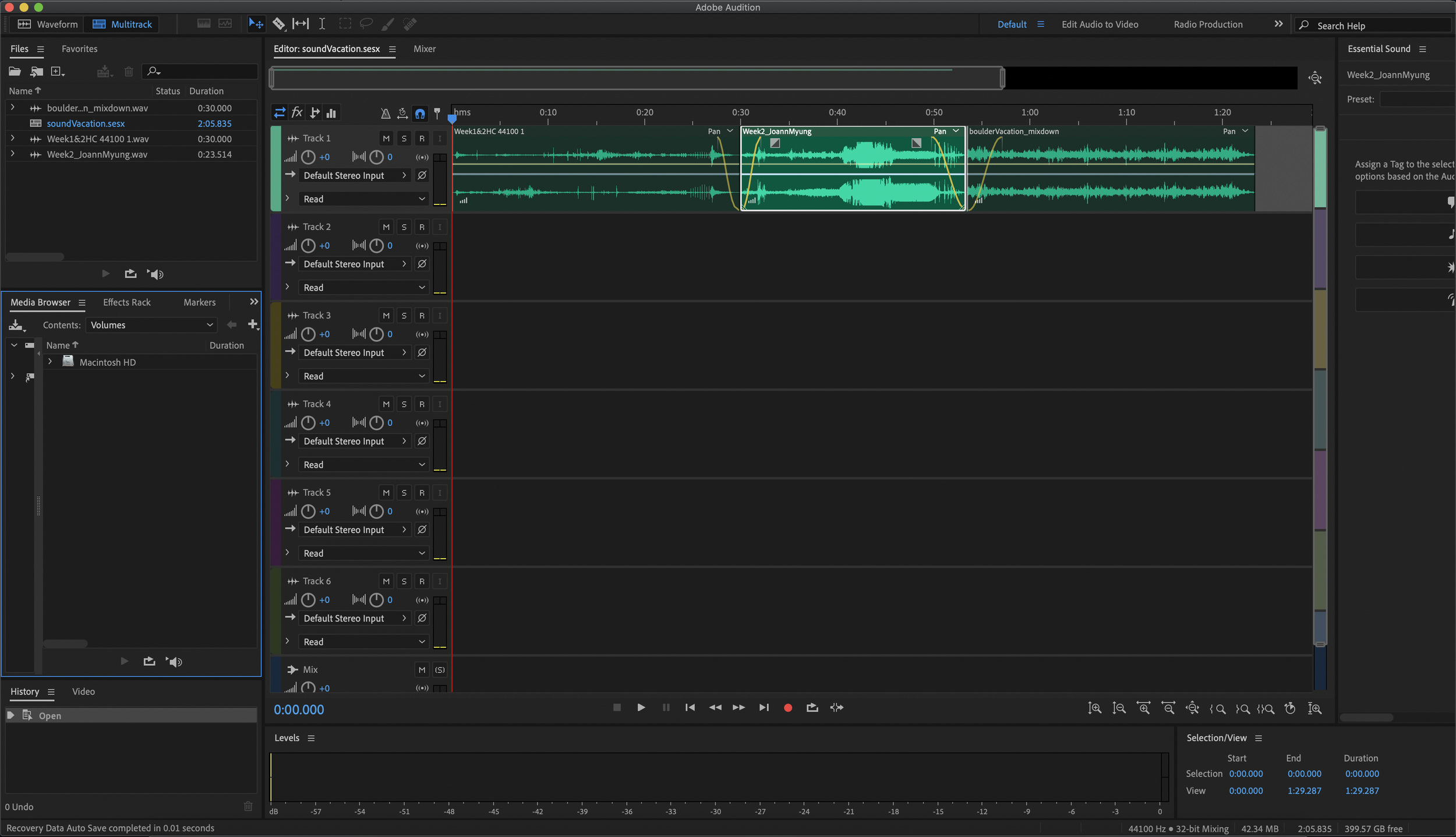

Lastly we considered the sound of the piece. We both really liked how each filmed video element played its own sounds and how the sounds layered and multiplied when more grapes were generated. In the background we’re just chatting and talking ourselves through the filming process but there are sound clips where we are discussing summer memories. I decided to add a background squishing sound to evoke the grapes being pressed into wine.

Building for iOS

So that we could display our Unity project on an iPad I followed this tutorial to build our project for iOS. The first step is to install iOS build support as shown in the screen capture below.

Then I needed to download Xcode and accept the Apple developer agreement. This part took the longest.

Once your “File” > “Build Settings” looks like this you’re ready to build for iOS with Xcode. Save the build in your project folder.

Then, I opened my newly built Xcode project by double-clicking the file with the “.xcodeproj” extension. Once in Xcode, I connected my device (in this case, my iPad) to my computer and selected it in the top drop down. I updated the app name, version number, and build but most importantly I chose “Personal Team” on the “Signing & Capabilities” tab. This basically means that I’m making this app for personal use and don’t intend to submit it to the Apple App Store.

All that was left was to press the play button in the upper left of the window, and the Unity app built to my iPad.

When I first tried to use my Unity app I got an “Untrusted Developer” pop up. I was able to trust myself as a developer by going into my iPad’s general settings. I also had this super weird bug in the first couple builds of this project where our feet seemed to be censored (they weren’t showing up in our app). I had to change the video’s codec in Unity and keep the alpha values to have the iOS build match what I was seeing while developing in Unity on my computer.

Final Product

Conclusion

There are a lot of things to consider when making a multi-media installation like this. We tried to keep the design as self-contained as possible but there are scenarios, like when the iPad needs to be charged, that we didn’t think of. Also, the iPad Unity project crashes way quicker than the project on the computer when too many grapes are spawned at once. We could reconsider how viewers interact with our box and how we “make wine” in Unity.

On the fabrication side, we had metal brackets for installing the frame on our physical box but didn’t end up putting that all together yet because we realized we wouldn’t be able to stand the box upright anymore. If this were installed in an exhibit somewhere it would definitely need to be hung on the wall at eye level (with the frame attached).

I kind of really love this weird project we thought up and built! And I also really enjoyed working with Elif! I learned so much about making things in Unity and some more about making things in real life.

Resources

https://www.pexels.com/video/blue-sky-video-855005/

https://www.istockphoto.com/photos/mirror-ball-texture

https://www.youtube.com/watch?v=KG2aq_CY7pU

https://www.youtube.com/watch?v=KlWPedIvwuw

https://www.youtube.com/watch?v=rdvyelwSnLM

https://answers.unity.com/questions/1220094/spawn-an-object-and-destroy-it.html

https://answers.unity.com/questions/1074165/how-to-increase-and-decrease-object-scale-over-tim.html